Siqing Li

Southern University of Science and Technology (SUSTech), Shenzhen, China.

lisq2022@mail.sustech.edu.cn

I’m a final-year undergraduate majoring in Statistics (Data Science) at SUSTech. During my undergraduate studies, I have worked on a range of projects, particularly in large language models (LLMs), interdisciplinary AI, and multimodal learning.

I am an upbeat, cheerful person who has taken part in many volunteer activities and has strong leadership skills. I devote myself wholeheartedly to my field of interest and approach it with genuine enthusiasm!

Background

B.Sc. in Statistics (Data Science)

Southern University of Science and Technology, Shenzhen, China

Sept. 2022 - Present

Overall GPA: 3.77/4.0 (3.85 in Last Semester)

Weighted Avg. Score: 94.5/100

Following the prescribed curriculum, I have taken foundational courses in statistics and computer science. I have also taken several advanced courses on cutting-edge topics to broaden my knowledge and stay attuned to frontier technologies.

Main Courses (Courses marked with * received “A” grades):

- Mathematics and Statistics: Probability Theory*, Mathematical Statistics*, Mathematical Analysis Essentials, Operations Research and Optimization*, Bayesian Statistics*, Statistical Learning*, Statistical Linear Models, Multivariable Statistics, Advanced Statistics Learning
- Computer Science and Programming: Computer Programming Design, Data Structure and Algorithm Analysis, Discrete Mathematics and Applications
- Big Data and Artificial Intelligence: Advanced Natural Language Processing*, Distributed Storage and Parallel Computing*, Big Data Analysis Software and Application*, Artificial Intelligence

Ongoing Projects

Conversational Digital Twins with LLMs

since July 2025

Developing a framework for conversational digital twins based on large language models. Implemented advanced data preprocessing and fine-tuned Qwen-32B and Llama-3.3-70B, achieving state-of-the-art fidelity in simulating human personalities.

Specialized Medical LLM with Multimodal RAG

since September 2025

Building an end-to-end medical LLM with Retrieval-Augmented Generation (RAG). Curating a multimodal knowledge base that integrates textual and visual medical information to strengthen domain understanding and support clinical decision-making.

news

| Date | News |
|---|---|
| Nov 20, 2025 | My latest official TOEFL score is 105 (R30, L24, S26, W25)! |
| Nov 18, 2025 | I have been selected for the AAAI-26 Student Scholarship. See you in Singapore! |
| Nov 08, 2025 | Our paper GaussMedAct was accepted to AAAI 2026. |
| Oct 20, 2025 | Our paper BiteEEG was accepted to BIBM 2025. |
| Oct 18, 2025 | I received the First-Class Outstanding Student Scholarship at SUSTech (top 3%). |

selected publications

- BIBM’25

Bidirectional Time-Frequency Pyramid Network for Enhanced Robust EEG Classification

Proposed BiTE, an end-to-end EEG classification framework that integrates multistream synergy, pyramid attention, and bidirectional adaptive convolutions for robust cross-paradigm brain-computer interface (BCI) tasks. Achieved state-of-the-art performance in both within-subject and cross-subject settings, and demonstrated computational efficiency across motor imagery (MI) and steady-state visual evoked potential (SSVEP) paradigms.

In 2025 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), 2025 (Oral, acceptance rate: 19.6%)

- AAAI’26

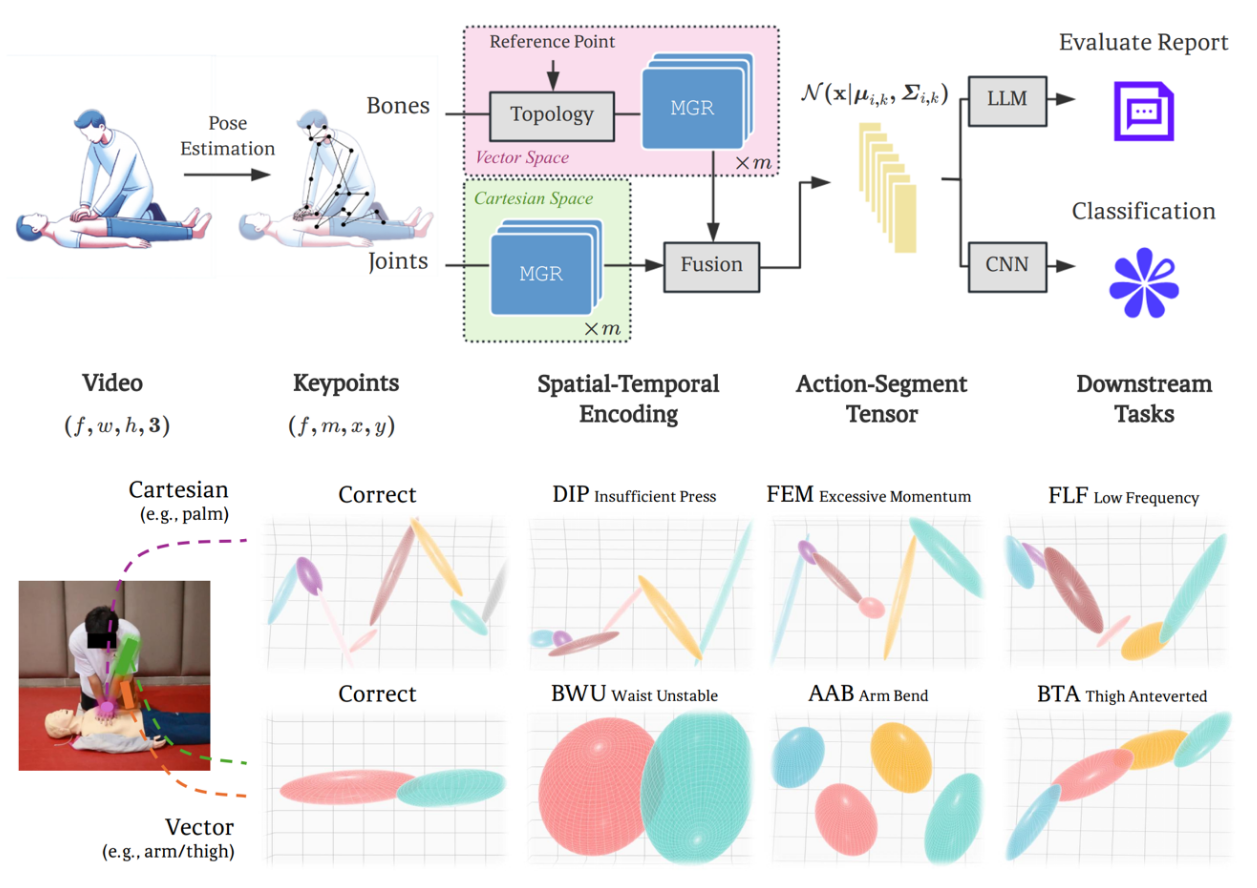

Multivariate Gaussian Representation Learning for Medical Action Evaluation

Developed a novel multivariate Gaussian representation learning method, inspired by Gaussian Splatting, for fine-grained temporal and spatial action evaluation. Fused RGB and skeletal-joint modalities for downstream tasks, achieving state-of-the-art performance on benchmarks such as PennAct and CPRCoach.

In The 40th Annual AAAI Conference on Artificial Intelligence, 2026

- ACL’26

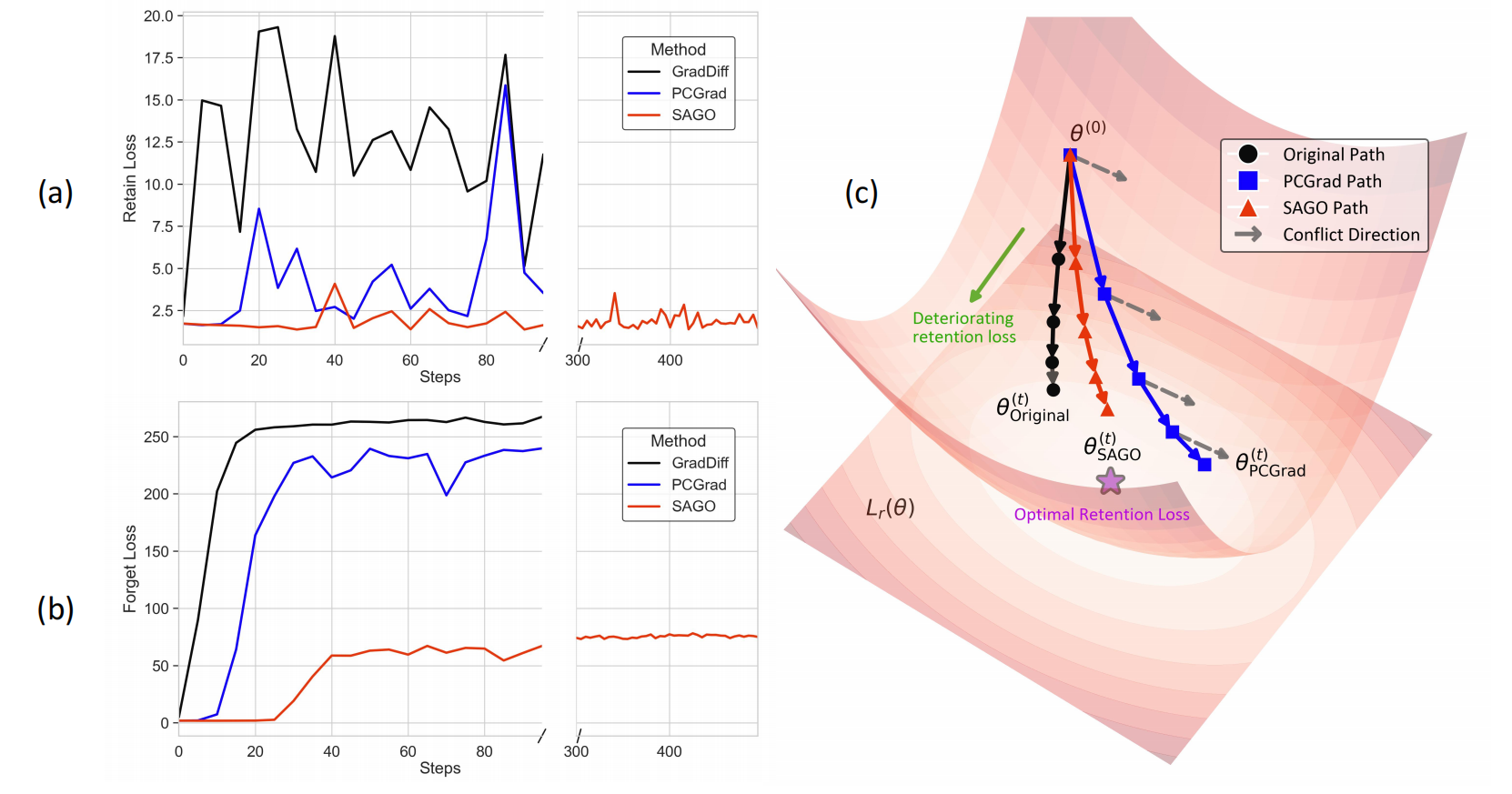

(In review) Modeling LLM Unlearning As An Asymmetric Two-task Learning Problem

Introduced SAGO, a novel asymmetric two-task learning framework for language model unlearning. The method prioritizes retention while effectively resolving gradient conflicts, improving performance on benchmarks such as WMDP and RWKU and advancing the Pareto frontier between forgetting and retention.

In The 64th Annual Meeting of the Association for Computational Linguistics, 2026

- Nature Comm.

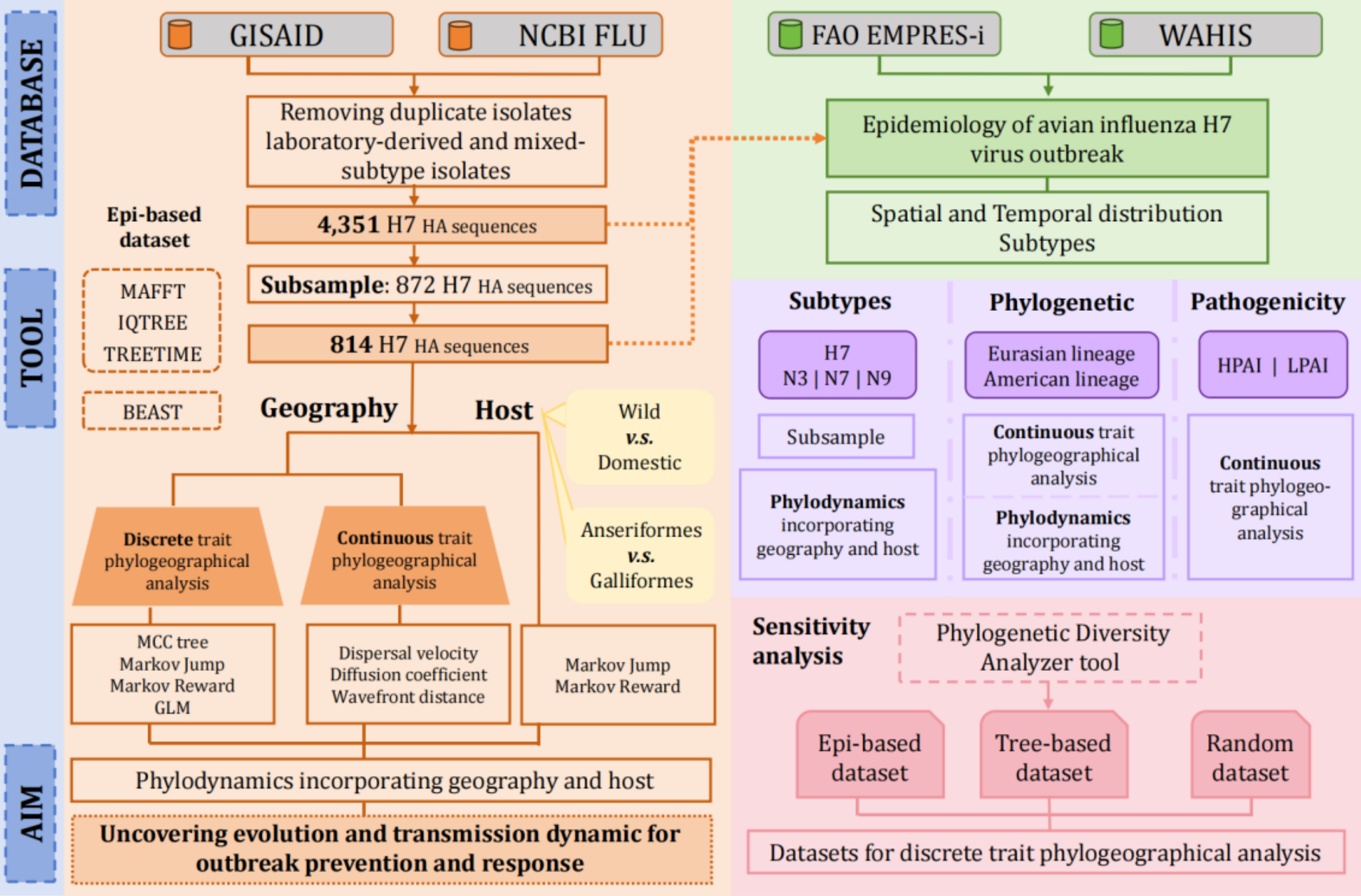

(In review, round 2) Disentangling the Drivers and Host-Mediated Global Spread of Influenza A Virus

Used Bayesian inference with integrated phylodynamic and phylogeographic analyses to model the global spread of the H7 virus and the factors driving it. Contributed to data analysis, modeling, validation, and manuscript preparation.

Nature Communications, 2026